Optical Shifts – Could Holographic Technology be Leveraged within a Cleanroom Environment?

In recent months we’ve written a lot about cars and automotive technology. From Tesla’s self-driving autos to Google’s investment in flying cars, we’ve delved into the intersection between technological advancement and cleanroom use to highlight the innovative ways in which the industry is incorporating our contamination control products and methodologies into their technologies. And it’s clear we live in exciting times. But it’s also true that some of these innovations will be confined – at least for the foreseeable future – to the upper end of the consumer spectrum. With Tesla’s semi-autonomous Model S boasting an MSRP north of $70K (base model, customizations extra), the semi-autonomy is unlikely to be within the grasp of every driver. So is it true that the current pace of innovation is creating a divide? Are we seeing two different classes of driver emerging – those with smart cars and those woefully without? In a time when the use of vehicular artificial intelligence makes our drives safer, more comfortable, and more pleasurable, what can those of us with mid-range budgets hope to see in our rides? With Honda’s new network of collaborations and partnerships, the answer is maybe more than we’ve come to expect…

In an era of creeping US isolationism, Honda Motor Co., originally founded in Hamamatsu, Japan in 1946, is moving in entirely the opposite direction, embracing a commercial and competitive worldview of increasingly open collaboration across multi-national lines. Manufacturing a broad range of products – from cars to robots, mountain bikes to snowblowers – Honda is in a state of reinvention, pivoting to access emerging niches and leveraging an accepted and trusted brand to venture into new markets.

And nowhere is this more evident than in its somewhat secretive facilities tucked away in Mountain View, the beating heart of California’s Silicon Valley. In the 35,000 square-foot office park of the Xcelerator program, Honda veteran Naoki Sugimoto directs a plethora of projects in collaboration with partners as diverse as Waymo (the semi-autonomous car project of Google’s parent company, Alphabet Inc.) and the sci-fi referential Leia 3D, creators of interactive holographic displays.(1) And, in contrast to Tesla, Honda’s focus is not upon self-driving capabilities for vehicles per se but rather upon creating an artificially-intelligent driving experience for regularly operated cars.(2) Its partnership with Leia 3D is a case in point.

Founded in 2013, this Silicon Valley start-up set its corporate sights on creating software for mobile applications – think Internet of Things, wearable technologies, smartphones and devices. But this is not about app-based games or music streaming or photo sharing. The main industry silos for this new tech are the medical field and – given Honda’s interest – the automotive industry.

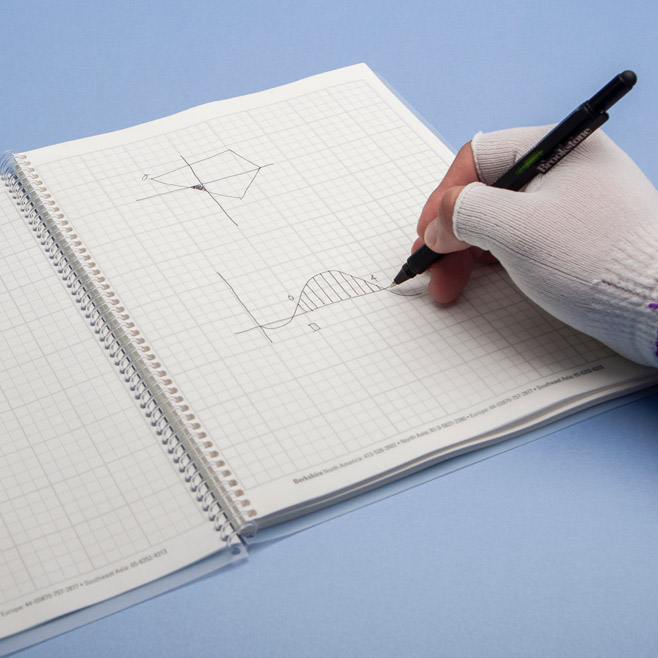

What Leia 3D is looking to do is to leverage its photonics-based technology to create interactive holographic displays. Born of research conducted at HP Labs in Palo Alto into the development of a multiview backlight, Leia’s display – the Diffractive Lightfield Backlighting (DLB™) solution – is, as the name suggests, a diffraction-based system that can project up to 64 images into different spatial directions. And the key to this unique application is the way the display is illuminated. Let’s explain…

In DLB™, a regular LED front panel is used with the light extracted from a surface lightguide being directed at the viewer in narrow beams of differing intensities. These light beams are pushed out from a backlight via diffraction patterns that force the beams into specific angles with controlled angular distribution. When these beams converge they create a seamless 3D impression of the projected object, and the rendering works regardless of the viewer’s head motion, angle of viewing, field of vision, and so forth. And this is a major breakthrough, as anyone who has ever tried to watch a 3D movie from the sidelines will know. As a March 2015 article on CNet reported:

“Each of the viewer’s eyes sees something slightly different, creating a 3D effect.

Moving your head then reveals a slightly different image, giving you the impression that the elements of the image have physical dimensions as they pop from the background. It still looks more like looking through a window at a physical object, however, with the animated elements appearing to float behind the screen rather than apparently popping out into the real world.”(3)
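To make the multiview idea concrete, here is a minimal sketch of how a set of directional views might map to a viewer's two eyes. It is an illustration only, not Leia 3D's implementation: the 64 views come from the description above, while the 90-degree field of view, the horizontal-only layout, and the function name `view_index` are assumptions made for the example.

```python
import math

NUM_VIEWS = 64           # from the article: up to 64 images in different directions
FIELD_OF_VIEW_DEG = 90   # assumption for illustration, not a published spec here


def view_index(eye_x_mm, distance_mm,
               num_views=NUM_VIEWS, fov_deg=FIELD_OF_VIEW_DEG):
    """Return the index of the view seen by an eye, or None if outside the FOV.

    eye_x_mm    -- horizontal offset of the eye from the screen centre
    distance_mm -- viewing distance from the screen
    Hypothetical model: views are spread evenly across the horizontal FOV.
    """
    angle = math.degrees(math.atan2(eye_x_mm, distance_mm))
    half = fov_deg / 2
    if not -half <= angle <= half:
        return None
    width = fov_deg / num_views  # angular width covered by one view
    return min(int((angle + half) / width), num_views - 1)


# Two eyes roughly 64 mm apart, 400 mm from the screen
left = view_index(-32, 400)
right = view_index(32, 400)
print(left, right)
```

Under these assumptions each eye falls into a different view, which is the basis of the 3D effect, and shifting `eye_x_mm` (moving your head) selects neighbouring views.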

Moreover, due to the company’s incorporation of nanotechnology, the holograms are able to detect the user’s finger touch. Although this is still a one-way process – with the hologram sensing the user, but not vice versa – such interactivity allows the user to manipulate the objects as if they were real. And Leia 3D promises that ‘In the near future we will also provide tactile feedback in mid-air to let you feel and touch virtual content outside of the screen!’(4)

But how different is this from competitive products? What Leia 3D has succeeded in doing is to create a unique interface in which the combination of hardware and custom software allows for a large field of view and dispenses with the need for eye-tracking or specialized gadgets that create a disconnection between the viewer and the hologram. And this is where Honda’s Sugimoto and his team are especially excited. Since Leia 3D’s technology works without the user having to don vision-limiting head-gear or eye-glasses, the display is especially well suited to the automotive industry. In addition to the ‘beyond cool factor’ of having a holographic driving assistant (move over, Siri, your time is limited!), potential applications could include a radical redesign of a vehicle’s central instrument cluster, the possibility of on-the-fly re-configuration, and seamless future upgrades.

But one problem remains. Although holograms may be ideal assistants when it comes to displaying visual information within a vehicle, there are times when we need audio assistance. To date, virtual assistants like Apple’s Siri have come to the rescue for travel directions, hands-free texting, and the like. In fact, in an interesting study reported on TheNextWeb.com, it was found that while only 3% of people feel comfortable using Siri in public, the usage comfort rate surged to 62% in the relative privacy of the user’s vehicle.(5) But when on the road, voice recognition in applications like Siri or Microsoft’s Cortana or Google’s OK Google can be flaky. How many times have you made a request of Siri – or any of the others – only to be utterly baffled by the results returned? And no, the problem is not your diction. The problem is background noise.

Although our human ears are adept at filtering out unwanted background noise, our virtual assistants are not so fortunate.

And while there are steps we can take to help them – speaking clearly at a steady pace without pauses, using a microphone, and dispensing with social norms like ‘please’ and ‘thank you’ – there is little we can do to actively filter out ambient noise. Until now.

In 2010, Tal Bakish, an entrepreneur with a background working with Cisco and IBM, established VocalZoom, an Israeli company that delivers Human-to-Machine Communication (HMC) sensors to enable ‘accurate and reliable voice control and biometrics authentication in any environment, regardless of noise.’(6) With intended applications for mobile phones, secure online payments, access authentication and control, and hands-free automotive control, VocalZoom adds a new layer of tech to standard Automatic Speech Recognition (ASR) – also known as ‘voice recognition.’ And the way in which it does this is surprising. Instead of simply filtering out extraneous noise, VocalZoom uses two independent sensors to actively capture an additional layer of user data. The traditional audio sensor is supplemented with an optical sensor that is immune to ambient noise. Working collaboratively with its audio counterpart, the optical sensor measures speech-generated vibrations around the mouth, throat, neck, and lips of the speaker, which the VocalZoom software then converts to a second layer of audio data, enhancing the clarity of the speaker’s voice.
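To give a feel for why a second, noise-immune sensor helps, here is a minimal sketch of one simple way two such signals could be combined. It is not VocalZoom's algorithm: instead of converting vibrations into a second audio layer, this example swaps in a much simpler technique, using the optical channel as a gate that decides when the speaker is actually talking. The function names, frame length, and threshold are all hypothetical.

```python
def frame_energy(samples):
    """Mean squared amplitude of a frame of samples."""
    return sum(s * s for s in samples) / len(samples)


def gate_audio(audio, optical, frame_len=4, threshold=0.01):
    """Keep audio frames only where the optical sensor shows speech vibration.

    audio   -- samples from the microphone (speech plus ambient noise)
    optical -- samples from the vibration sensor (assumed immune to ambient noise)
    Frames whose optical energy is at or below the threshold are zeroed out.
    """
    cleaned = []
    for start in range(0, len(audio), frame_len):
        audio_frame = audio[start:start + frame_len]
        optical_frame = optical[start:start + frame_len]
        speaking = bool(optical_frame) and frame_energy(optical_frame) > threshold
        cleaned.extend(audio_frame if speaking else [0.0] * len(audio_frame))
    return cleaned


# First frame: background noise only. Second frame: the speaker is talking.
audio = [0.5, -0.4, 0.3, -0.2, 0.6, -0.5, 0.4, -0.3]
optical = [0.0, 0.0, 0.0, 0.0, 0.2, -0.2, 0.2, -0.2]
print(gate_audio(audio, optical))
```

In this toy example the noisy first frame is silenced because the optical sensor registers no vibration, while the second frame passes through untouched.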

But surely this device is bulky and unwieldy? Not at all. In fact, the current metrics show a diminutive sensor of just 10mm x 10mm, a form factor that VocalZoom intends to reduce by 50% moving forward. Reliability and easy operation in all real-world environments – could this be the future of voice recognition?

Perhaps, especially if combined with artificially-intelligent interfaces such as Leia 3D’s holograms. And this is where it gets exciting for our industry too. Holographic technology relies on optical interconnects – using light to transport information around a chip – deploying photonic engineering on a nano scale. Nanofabrication – the manufacturing of devices that are measured by the millionth of a millimeter – demands the use of cleanroom facilities, often at the Class 10, 1000, or 10000 level. And at this incredibly small scale, any contamination – whether it be particulate, vaporous, microbial, or bacterial – can do irreparable harm, costing time, resources, and money. So R&D is linked inherently with cleanroom technology but the question is: Could the relationship be a two-way street? Could it be that applications developed by VocalZoom and Leia 3D might also play a significant role in day-to-day cleanroom operations? Let’s take a look…

Depending on the specifics of the industry in question, contamination-controlled environments can be noisy places to work in, with communication between technicians strained. Normal speech recognition in humans depends on a couple of things – the passing of auditory data and the optical analysis of facial expressions that support the words spoken. But in a cleanroom environment, facial expressions are obscured by personal protective equipment and voices are drowned out by HVAC systems, making communication more difficult. A system such as VocalZoom could take some of the guesswork out of routine conversations, clarifying speech and cutting down on the potential for error through miscommunication. And just as we reported on the potential for artificially-intelligent wearables in our article ‘Could Wearable Technology be a Powerful New Tool in Contamination Control?’, donning these devices could become just one additional part of the gowning process. Since many cleanrooms prohibit the use of cellphones within contamination-controlled areas, the adoption of sterile wearables that communicate with the outside world and interface with the web could present significant benefits.

And let’s stretch our imaginations just that little bit further.

What if Leia 3D were to extend its reach by creating AI-driven holograms that were customized to each cleanroom facility? What if the holographic image could be programmed with industry specifics, becoming the personification of cleanroom management? It would be simultaneously a repository of SOP information, data sheets, cGMPs, analysis, technical specifications, legal provisions, emergency procedures, and more – all in an on-demand, always accessible, virtual human-form. It could play the role of a supervisor, an access point, a library, or a simple timer, offering a sterile conduit to the outside world and a fool-proof QA. Need to know the current SOP for a Megestrol Acetate liquid spill? It’s at your virtual fingertips. Need precision timing on a batch run? The hologram has you covered. And in the unfortunate event of an accident or emergency, the hologram – interfacing with a technician’s sensor-equipped ‘smart bunny suit’ – could create an alert and relay critical biometric and medical data to emergency personnel, getting help automatically and immediately.

Is this scenario the stuff of science fiction? Not really. As we’ve seen from the advances made by Leia 3D and VocalZoom, the technology is already in place and it’s just a matter of customizing it for our contamination-controlled environments. We are naturally always striving for more intuitive ways to interface with digital information in order to perform our jobs most efficiently and, while there may still be work ahead, it is extremely likely that, once mature, this technology will be appearing in cleanrooms. Put simply, the stakes for our industry are too high for us to ignore any advancement that allows for increased precision and control. So the hologram helpers are on their way and the question is: Are you ready?
